▶ Mock Test link : https://beta.kodekloud.com/user/courses/udemy-labs-certified-kubernetes-administrator-with-practice-tests


▶ Exam environment setup

(1) Open the official documentation

=> https://kubernetes.io/docs/home/

(2) Set up kubectl autocompletion so that it completes commands when you press Tab

#Autocompletion

source <(kubectl completion bash) # set up autocomplete in bash into the current shell, bash-completion package should be installed first.

echo "source <(kubectl completion bash)" >> ~/.bashrc # add autocomplete permanently to your bash shell.

#Alias

alias k=kubectl

complete -o default -F __start_kubectl k # make autocompletion work for the alias k too

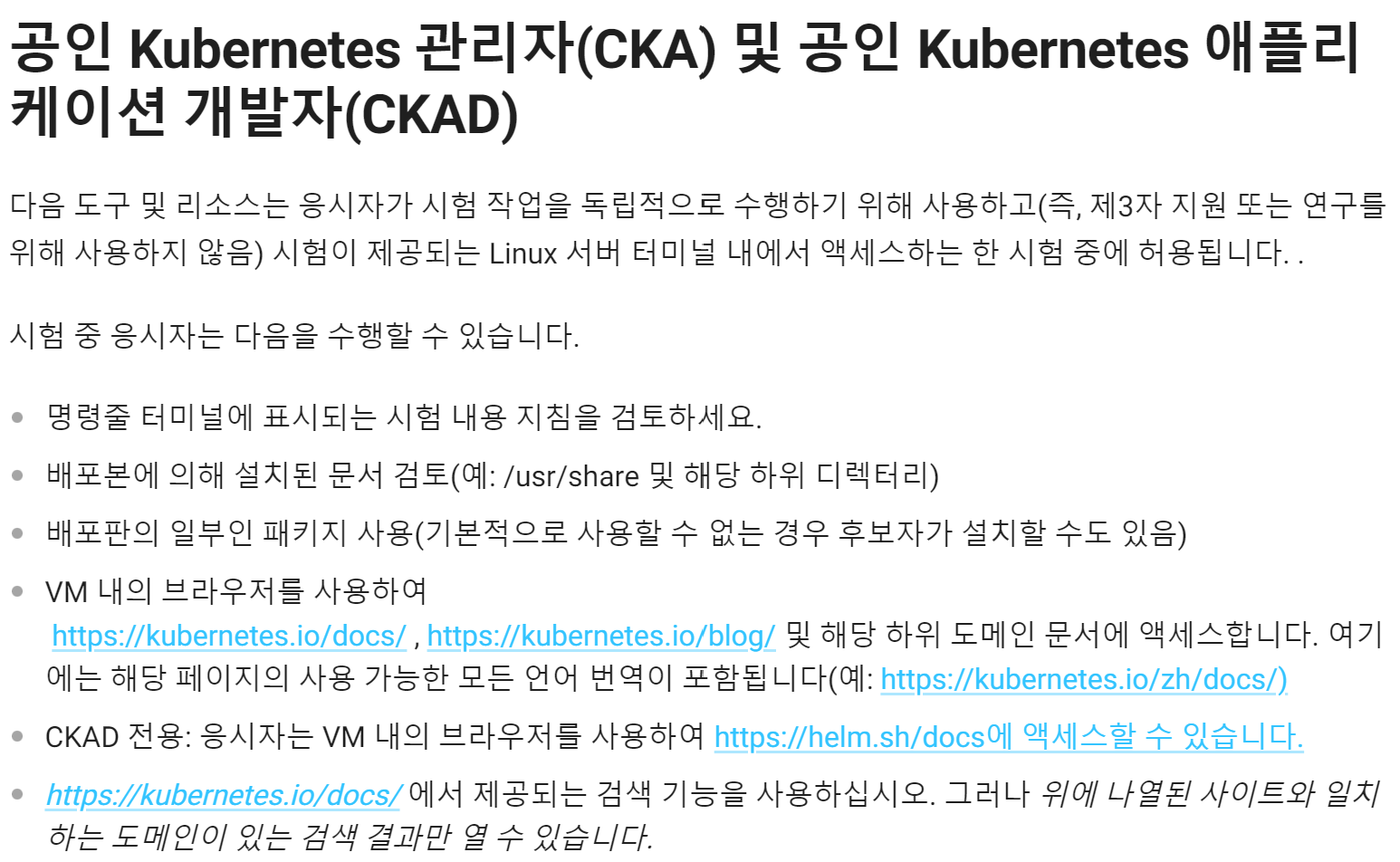

▶ Reference sites you can access

https://kubernetes.io/docs/home/

e.g. https://kubernetes.io/docs/reference/kubectl/quick-reference/


01. Create a new service account with the name pvviewer. Grant this Service account access to list all PersistentVolumes in the cluster by creating an appropriate cluster role called pvviewer-role and ClusterRoleBinding called pvviewer-role-binding.

Next, create a pod called pvviewer with the image: redis and serviceAccount: pvviewer in the default namespace.

Task type: Create a service account + grant permissions + create a pod

▶ Steps

#Create the service account

k create serviceaccount pvviewer

#k get sa

NAME SECRETS AGE

default 0 43m

pvviewer 0 3s

#Create the ClusterRole

k create clusterrole --help

#kubectl create clusterrole pod-reader --verb=get,list,watch --resource=pods

kubectl create clusterrole pvviewer-role --verb=list --resource=persistentvolumes

#k get clusterrole pvviewer-role

NAME CREATED AT

pvviewer-role 2024-05-08T04:49:24Z

#Create the ClusterRoleBinding

k create clusterrolebinding --help

#kubectl create clusterrolebinding cluster-admin --clusterrole=cluster-admin --user=user1 --user=user2 --group=group1

kubectl create clusterrolebinding pvviewer-role-binding --clusterrole=pvviewer-role --serviceaccount=default:pvviewer

#k describe clusterrolebindings pvviewer-role-binding

Name: pvviewer-role-binding

Labels: <none>

Annotations: <none>

Role:

Kind: ClusterRole

Name: pvviewer-role

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount pvviewer default

#Create the pod: generate a manifest, then add serviceAccountName under spec

k run pvviewer --image=redis --dry-run=client -o yaml > pvviewer.yaml

#cat pvviewer.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pvviewer
  name: pvviewer
spec:
  serviceAccountName: pvviewer # <<< serviceAccountName added manually
  containers:
  - image: redis
    name: pvviewer
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

#apply

k apply -f pvviewer.yaml

▶ Verification

#describe

k describe pod pvviewer

#k describe pod pvviewer | grep -i service

#Service Account: pvviewer

# /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-45j4l (ro)
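A quicker way to confirm the RBAC part is to impersonate the service account with `kubectl auth can-i`. Kubernetes identifies a ServiceAccount as the user `system:serviceaccount:<namespace>:<name>`. The small helper below builds that string so the check can be reused for other accounts. `sa_user` is a hypothetical name for this note, not part of kubectl.

```bash
#!/usr/bin/env bash
# Build the username Kubernetes uses for a ServiceAccount:
#   system:serviceaccount:<namespace>:<name>
# sa_user is a hypothetical helper, not a kubectl command.
sa_user() {
  local ns="$1" sa="$2"
  printf 'system:serviceaccount:%s:%s\n' "$ns" "$sa"
}

# On a live cluster (not executed here). Since pvviewer-role only grants "list"
# on persistentvolumes, the first command is expected to print "yes" and the second "no":
#   kubectl auth can-i list persistentvolumes --as="$(sa_user default pvviewer)"
#   kubectl auth can-i delete persistentvolumes --as="$(sa_user default pvviewer)"
```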

02. List the InternalIP of all nodes of the cluster. Save the result to a file /root/CKA/node_ips.

Answer should be in the format: InternalIP of controlplane<space>InternalIP of node01 (in a single line)

Task type: List the InternalIP of every node and save it to a file

▶ Steps

#Check the nodes' InternalIPs

k get no -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

controlplane Ready control-plane 46m v1.29.0 192.3.246.12 <none> Ubuntu 22.04.4 LTS 5.4.0-1106-gcp containerd://1.6.26

node01 Ready <none> 45m v1.29.0 192.3.246.3 <none> Ubuntu 22.04.3 LTS 5.4.0-1106-gcp containerd://1.6.26

#View the nodes as JSON

k get no -o json

#Pretty-print with jq and page through the output with more

k get no -o json | jq | more

#grep for the InternalIP entries only

k get no -o json | jq | grep -i internalip

k get no -o json | jq | grep -i internalip -B 100

#List the JSON paths of the node objects to see their structure

k get no -o json | jq -c 'paths'

k get no -o json | jq -c 'paths' | grep type

k get no -o json | jq -c 'paths' | grep type | grep -v conditions

#Get the InternalIP address entry of the first node (items[0], here controlplane)

k get no -o jsonpath='{.items}' | jq

k get no -o jsonpath='{.items[0].status.addresses}' | jq

k get no -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")]}' | jq

#{

# "address": "192.3.246.12",

# "type": "InternalIP"

#}

#Get only the first node's address

k get no -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'

#192.3.246.12

#Get the addresses of all nodes (space-separated, on one line)

k get no -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}'

#192.3.246.12 192.3.246.3

#Save the result to the file

k get no -o jsonpath='{.items[*].status.addresses[?(@.type=="InternalIP")].address}' > /root/CKA/node_ips

▶ Verification

cat /root/CKA/node_ips
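You can cross-check the jsonpath answer against the INTERNAL-IP column of `kubectl get nodes -o wide`. The sketch below finds that column from the header row and joins the values with single spaces, which is the format the task asks for. `internal_ips_from_wide` is a hypothetical name. The sketch assumes that every column before INTERNAL-IP is a single token without spaces, as in the output above. The jsonpath query remains the more robust answer.

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes -o wide` output on stdin and print the INTERNAL-IP
# values on one line, separated by single spaces.
# Assumption: columns before INTERNAL-IP (NAME, STATUS, ROLES, AGE, VERSION)
# contain no spaces.
internal_ips_from_wide() {
  awk '
    NR == 1 {
      # Locate the INTERNAL-IP column in the header row
      for (i = 1; i <= NF; i++) if ($i == "INTERNAL-IP") col = i
      next
    }
    col > 0 { ips = (ips == "" ? $col : ips " " $col) }
    END { print ips }
  '
}

# Usage on the cluster (not executed here):
#   kubectl get nodes -o wide | internal_ips_from_wide
```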

03. Create a pod called multi-pod with two containers.

Container 1: name: alpha, image: nginx

Container 2: name: beta, image: busybox, command: sleep 4800

Environment Variables:

container 1:

name: alpha

Container 2:

name: beta

Task type: Multi-container pod

▶ Steps

#Generate the YAML file, then edit it

k run multi-pod --image=nginx --dry-run=client -o yaml > multipod.yaml

vi multipod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: multi-pod
  name: multi-pod
spec:
  containers:
  - image: nginx
    name: alpha
    env:
    - name: "name"
      value: "alpha"
  - image: busybox
    name: beta
    command:
    - sleep
    - "4800"
    env:
    - name: "name"
      value: "beta"
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

k apply -f multipod.yaml

▶ Verification

controlplane ~ ➜ k get pod

NAME READY STATUS RESTARTS AGE

multi-pod 2/2 Running 0 9s

04. Create a Pod called non-root-pod, image: redis:alpine

runAsUser: 1000

fsGroup: 2000

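Task type: Pod creation (securityContext)

▶ Steps

k run non-root-pod --image=redis:alpine --dry-run=client -o yaml > non-root-pod.yaml

#Searching kubernetes.io/docs for runAsUser brings up the security context documentation as the first result; the fields go under spec.securityContext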

cat non-root-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: non-root-pod
  name: non-root-pod
spec:
  securityContext:   # add a pod-level security context
    runAsUser: 1000  # processes in all containers run with UID 1000
    fsGroup: 2000    # supplementary group for all containers; supported volumes are owned by this GID
  containers:
  - image: redis:alpine
    name: non-root-pod
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

k apply -f non-root-pod.yaml

▶ Verification

k describe pod non-root-pod | grep -i image

k get pod non-root-pod -o yaml

spec:
  containers:
  - image: redis:alpine
    imagePullPolicy: IfNotPresent
    name: non-root-pod
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: kube-api-access-7rzgx
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: node01
  preemptionPolicy: PreemptLowerPriority
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext:
    fsGroup: 2000
    runAsUser: 1000
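The YAML above only shows that the fields were set. To see them take effect, one option is to run `id` inside the container with `kubectl exec non-root-pod -- id`. The UID should be 1000. Because fsGroup is added as a supplementary group, 2000 should appear in the groups list. The helper below checks one line of `id` output for both. `id_matches` is a hypothetical name. It assumes the common `uid=... gid=... groups=a,b,...` format, where each entry may carry a `(name)` suffix.

```bash
#!/usr/bin/env bash
# Check `id` output for an expected UID and supplementary group.
# Assumed format: uid=N[(name)] gid=N[(name)] groups=N[(name)],N[(name)],...
# id_matches is a hypothetical helper, not a standard tool.
id_matches() {
  local out="$1" uid="$2" gid="$3"
  # uid=<uid> must be followed by "(", a space, or end of line (so 1000 != 10001)
  printf '%s\n' "$out" | grep -Eq "(^| )uid=${uid}([ (]|\$)" || return 1
  # <gid> must be a whole entry somewhere in the comma-separated groups= list
  printf '%s\n' "$out" | grep -Eq "groups=([^ ]*,)?${gid}([,( ]|\$)"
}

# Usage on the cluster (not executed here):
#   id_matches "$(kubectl exec non-root-pod -- id)" 1000 2000 && echo OK
```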

05. We have deployed a new pod called np-test-1 and a service called np-test-service. Incoming connections to this service are not working. Troubleshoot and fix it.

Create NetworkPolicy, by the name ingress-to-nptest that allows incoming connections to the service over port 80.

Important: Don't delete any current objects deployed.

Task type: Troubleshooting + NetworkPolicy

▶ Steps

k get svc

k get pod

#Optional: test the service from a throwaway pod

#k run curl --image=alpine/curl --rm -it -- sh

#networkpolicy.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ingress-to-nptest
  namespace: default
spec:
  podSelector:
    matchLabels:
      run: np-test-1
  policyTypes:
  - Ingress
  ingress:
  - ports:          # ingress is a list of rules; with no "from", any source is allowed
    - protocol: TCP
      port: 80

k apply -f networkpolicy.yaml

▶ Verification

k get networkpolicy

06. Taint the worker node node01 to be Unschedulable. Once done, create a pod called dev-redis, image redis:alpine, to ensure workloads are not scheduled to this worker node. Finally, create a new pod called prod-redis and image: redis:alpine with toleration to be scheduled on node01.

key: env_type,

value: production,

operator: Equal

and effect: NoSchedule

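Task type: Unschedulable node (taint) + toleration

▶ Steps

k taint --help

#Apply the taint to node01

k taint nodes node01 env_type=production:NoSchedule

#Confirm the taint

k describe node node01

#Taints: env_type=production:NoSchedule

#Create the pods

k run dev-redis --image=redis:alpine

#dev-redis has no toleration, so it should not be placed on node01

k get pod -o wide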

k run prod-redis --image=redis:alpine --dry-run=client -o yaml > prod-redis.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: prod-redis
  name: prod-redis
spec:
  tolerations:
  - key: "env_type"
    operator: "Equal"
    value: "production"
    effect: "NoSchedule"
  containers:
  - image: redis:alpine
    name: prod-redis
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

k apply -f prod-redis.yaml

▶ Verification

k describe pod prod-redis

#Tolerations: env_type=production:NoSchedule
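dev-redis stays off node01 while prod-redis may land there because of how a toleration matches a taint. The Kubernetes taints and tolerations documentation gives these rules:

- The effects must be equal. An empty toleration effect matches any effect.
- With `operator: Equal`, both the key and the value must match.
- With `operator: Exists`, only the key must match. An empty key matches every taint.

The bash function below encodes just those rules for one toleration and one taint. `tolerates` is a hypothetical name. A toleration only allows scheduling onto the tainted node; it does not force it. `k get pod -o wide` is still the place to check where prod-redis actually runs.

```bash
#!/usr/bin/env bash
# Does one toleration match one taint? Encodes the basic matching rules from the
# Kubernetes "Taints and Tolerations" docs. tolerates is a hypothetical helper.
# Usage: tolerates TOL_KEY TOL_OPERATOR TOL_VALUE TOL_EFFECT TAINT_KEY TAINT_VALUE TAINT_EFFECT
tolerates() {
  local tkey="$1" op="${2:-Equal}" tval="$3" teff="$4"   # operator defaults to Equal
  local key="$5" val="$6" eff="$7"
  # An empty toleration effect matches every effect; otherwise effects must be equal
  if [ -n "$teff" ] && [ "$teff" != "$eff" ]; then
    return 1
  fi
  case "$op" in
    Exists) [ -z "$tkey" ] || [ "$tkey" = "$key" ] ;;        # value is ignored
    Equal)  [ "$tkey" = "$key" ] && [ "$tval" = "$val" ] ;;
    *)      return 1 ;;
  esac
}

# prod-redis's toleration vs. the node01 taint env_type=production:NoSchedule:
#   tolerates env_type Equal production NoSchedule env_type production NoSchedule && echo tolerated
```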

07. Create a pod called hr-pod in hr namespace belonging to the production environment and frontend tier.

image: redis:alpine

Use appropriate labels and create all the required objects if it does not exist in the system already.

Task type: Pod creation in a given namespace

▶ Steps

#Check whether the hr namespace already exists

k get ns

#Create the namespace

k create ns hr

k run hr-pod --image=redis:alpine -n hr --labels="environment=production,tier=frontend"

▶ Verification

k get pod

NAME READY STATUS RESTARTS AGE

dev-redis 1/1 Running 0 15m

np-test-1 1/1 Running 0 26m

prod-redis 1/1 Running 0 10m

k get pod -n hr

NAME READY STATUS RESTARTS AGE

hr-pod 1/1 Running 0 18s

08. A kubeconfig file called super.kubeconfig has been created under /root/CKA. There is something wrong with the configuration. Troubleshoot and fix it.

Task type: Troubleshooting + kubeconfig

▶ Steps

#To use a kubeconfig file that is not in the default location, pass its path with the --kubeconfig flag

k get nodes --kubeconfig /root/CKA/super.kubeconfig

E0513 08:49:02.259532 13958 memcache.go:265] couldn't get current server API group list: Get "https://controlplane:9999/api?timeout=32s": dial tcp 192.13.7.11:9999: connect: connection refused

E0513 08:49:02.260045 13958 memcache.go:265] couldn't get current server API group list: Get "https://controlplane:9999/api?timeout=32s": dial tcp 192.13.7.11:9999: connect: connection refused

E0513 08:49:02.261606 13958 memcache.go:265] couldn't get current server API group list: Get "https://controlplane:9999/api?timeout=32s": dial tcp 192.13.7.11:9999: connect: connection refused

E0513 08:49:02.261972 13958 memcache.go:265] couldn't get current server API group list: Get "https://controlplane:9999/api?timeout=32s": dial tcp 192.13.7.11:9999: connect: connection refused

E0513 08:49:02.263517 13958 memcache.go:265] couldn't get current server API group list: Get "https://controlplane:9999/api?timeout=32s": dial tcp 192.13.7.11:9999: connect: connection refused

The connection to the server controlplane:9999 was refused - did you specify the right host or port?

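#The connection to port 9999 is refused, so the port in the server: URL is the likely culprit

#Check which port the API server actually listens on; the default is 6443 (compare with the server: entry of the kubeconfig kubectl normally uses, ~/.kube/config)

#Change the port to 6443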

vi /root/CKA/super.kubeconfig


▶ Verification

k get nodes --kubeconfig /root/CKA/super.kubeconfig

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 50m v1.29.0

node01 Ready <none> 49m v1.29.0
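You can apply the same fix without opening an editor. The sketch below rewrites the port on every `server: https://<host>:<port>` line of a kubeconfig file. `fix_server_port` is a hypothetical helper. It relies on GNU sed's `-i` for an in-place edit. It also assumes the server URL ends right after the port, as in this lab.

```bash
#!/usr/bin/env bash
# Replace the API server port in the "server:" lines of a kubeconfig (GNU sed -i).
# fix_server_port is a hypothetical helper; it assumes URLs like https://host:PORT
# with nothing after the port.
fix_server_port() {
  local file="$1" from="$2" to="$3"
  sed -i "s#\(server: https://[^:/]*\):${from}\$#\1:${to}#" "$file"
}

# Usage (not executed here):
#   grep 'server:' ~/.kube/config                        # see the working port
#   fix_server_port /root/CKA/super.kubeconfig 9999 6443
#   kubectl get nodes --kubeconfig /root/CKA/super.kubeconfig
```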

09. We have created a new deployment called nginx-deploy. scale the deployment to 3 replicas. Has the replica's increased? Troubleshoot the issue and fix it.

Task type: Troubleshooting + replicas

▶ Steps

#1. Scale the deployment

#2. Deployment: nginx-deploy

#3. replicas=3

k get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 1/1 1 1 15m

controlplane ~ ➜ k scale deployment nginx-deploy --replicas=3

deployment.apps/nginx-deploy scaled

controlplane ~ ➜ k get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 1/3 1 1 16m

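#The scale command succeeded, but READY stays at 1/3. The Deployment and ReplicaSet controllers that create the extra pods run inside kube-controller-manager, so check the control-plane pods in kube-system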

k get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-69f9c977-7hmcl 1/1 Running 0 60m

coredns-69f9c977-czn9n 1/1 Running 0 60m

etcd-controlplane 1/1 Running 0 61m

kube-apiserver-controlplane 1/1 Running 0 61m

kube-contro1ler-manager-controlplane 0/1 ImagePullBackOff 0 10m

kube-proxy-ltffz 1/1 Running 0 60m

kube-proxy-lzsjh 1/1 Running 0 60m

kube-scheduler-controlplane 1/1 Running 0 61m

weave-net-899gs 2/2 Running 0 60m

weave-net-m96kj 2/2 Running 1 (60m ago) 60m
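When kube-system holds many pods, you can filter the broken one out of this listing automatically. The awk sketch below prints pods whose STATUS is not Running or whose READY count is incomplete. `unhealthy_pods` is a hypothetical name. It relies on NAME, READY and STATUS being the first three whitespace-separated columns. Later columns such as RESTARTS (`1 (60m ago)`) can contain spaces, so the sketch does not use them.

```bash
#!/usr/bin/env bash
# Read `kubectl get pods` output on stdin; print NAME READY STATUS for pods that
# are not Running or whose READY count is incomplete (e.g. 0/1).
unhealthy_pods() {
  awk 'NR > 1 {
    split($2, r, "/")                 # READY, e.g. "0/1" -> r[1]=0, r[2]=1
    if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
  }'
}

# Usage on the cluster (not executed here):
#   kubectl get pods -n kube-system | unhealthy_pods
# For the listing above this prints only the kube-contro1ler-manager-controlplane line.
```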

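#Control-plane components run as static pods whose manifests live in /etc/kubernetes/manifests

controlplane ~ ➜ cd /etc/kubernetes/manifests/

controlplane /etc/kubernetes/manifests ➜ ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

#The pod name kube-contro1ler-manager-controlplane (digit 1 instead of the letter l) and the ImagePullBackOff status suggest a typo in this manifest: change every "contro1ler" to "controller"

#The kubelet recreates the static pod automatically once the file is saved

controlplane /etc/kubernetes/manifests ➜ vi kube-controller-manager.yaml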
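If the typo is the only problem, you can also find and fix it non-interactively. The sketch below first lists the matching lines with `grep -n`, then replaces every occurrence with GNU `sed -i`. `fix_token` is a hypothetical helper. It assumes the bad token is exactly `contro1ler`, so check the grep output before trusting the replacement. If you want a backup, keep it outside /etc/kubernetes/manifests, because the kubelet reads the files in that directory as static pod manifests.

```bash
#!/usr/bin/env bash
# Show, then fix, every occurrence of a misspelled token in a file (GNU sed -i).
# fix_token is a hypothetical helper; BAD and GOOD must not contain sed special characters.
fix_token() {
  local file="$1" bad="$2" good="$3"
  grep -n -- "$bad" "$file" || true      # list affected lines (no match is not an error)
  sed -i "s/${bad}/${good}/g" "$file"
}

# Usage (not executed here):
#   cp /etc/kubernetes/manifests/kube-controller-manager.yaml /root/kcm.yaml.bak
#   fix_token /etc/kubernetes/manifests/kube-controller-manager.yaml contro1ler controller
```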

controlplane /etc/kubernetes/manifests ➜ k get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-69f9c977-7hmcl 1/1 Running 0 64m

coredns-69f9c977-czn9n 1/1 Running 0 64m

etcd-controlplane 1/1 Running 0 64m

kube-apiserver-controlplane 1/1 Running 0 64m

kube-controller-manager-controlplane 0/1 Running 0 6s

kube-proxy-ltffz 1/1 Running 0 64m

kube-proxy-lzsjh 1/1 Running 0 63m

kube-scheduler-controlplane 1/1 Running 0 64m

weave-net-899gs 2/2 Running 0 63m

weave-net-m96kj 2/2 Running 1 (64m ago) 64m


▶ Verification

controlplane /etc/kubernetes/manifests ➜ k get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 21m
