[CKA] killer.sh ( Question 1 ~ Question 10 )

Ver. 240629

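*Notes*

- These are my notes on solving the killer.sh questions.

- The "Use context: ..." instructions were not applied.

(The practice environment was a single cluster with one master node and two worker nodes; the control-plane node is named master.)

- There may be errors.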

Question 1 | Contexts

You have access to multiple clusters from your main terminal through kubectl contexts. Write all those context names into /opt/course/1/contexts.

Next write a command to display the current context into /opt/course/1/context_default_kubectl.sh, the command should use kubectl.

Finally write a second command doing the same thing into /opt/course/1/context_default_no_kubectl.sh, but without the use of kubectl.

Answer:

//alias k='kubectl'

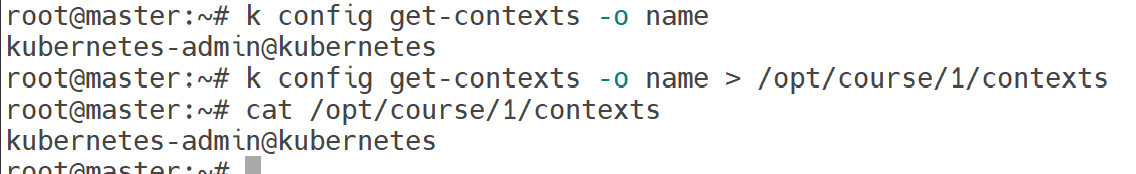

k config get-contexts -o name > /opt/course/1/contexts

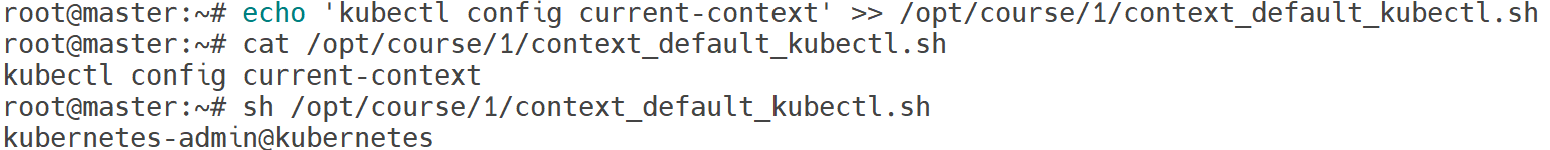

echo 'kubectl config current-context' >> /opt/course/1/context_default_kubectl.sh

sh /opt/course/1/context_default_kubectl.sh

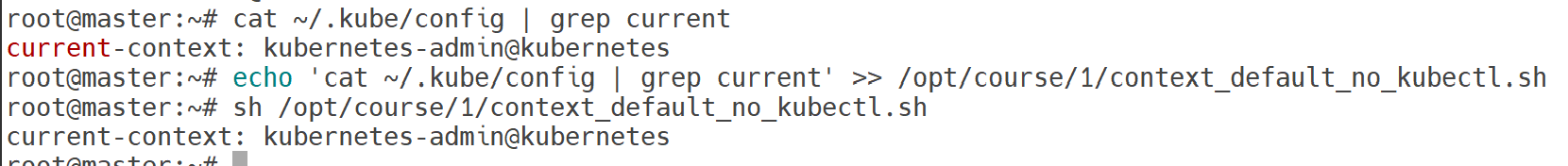

echo 'cat ~/.kube/config | grep current' >> /opt/course/1/context_default_no_kubectl.sh

sh /opt/course/1/context_default_no_kubectl.sh
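The grep above prints the whole `current-context: ...` line. If only the context name is wanted, a minimal sketch using sed could look like the following. The helper name `current_context_from_file` and the sample file are made up for illustration, and the sketch assumes the value is unquoted and sits on a single top-level line.

```shell
# Hypothetical helper: print only the value of the top-level
# current-context key from a kubeconfig file (no kubectl involved).
current_context_from_file() {
  sed -n 's/^current-context:[[:space:]]*//p' "$1"
}

# Sample kubeconfig fragment, made up for this illustration.
sample_kubeconfig="$(mktemp)"
cat > "$sample_kubeconfig" <<'EOF'
apiVersion: v1
kind: Config
current-context: k8s-c1-H
preferences: {}
EOF

current_context_from_file "$sample_kubeconfig"
```

Against the real file the call would be `current_context_from_file ~/.kube/config`.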

https://kubernetes.io/docs/reference/kubectl/quick-reference/

kubectl Quick Reference


Question 2 | Schedule Pod on Controlplane Nodes

Use context: kubectl config use-context k8s-c1-H

Create a single Pod of image httpd:2.4.41-alpine in Namespace default. The Pod should be named pod1 and the container should be named pod1-container. This Pod should only be scheduled on controlplane nodes. Do not add new labels to any nodes.

Answer:

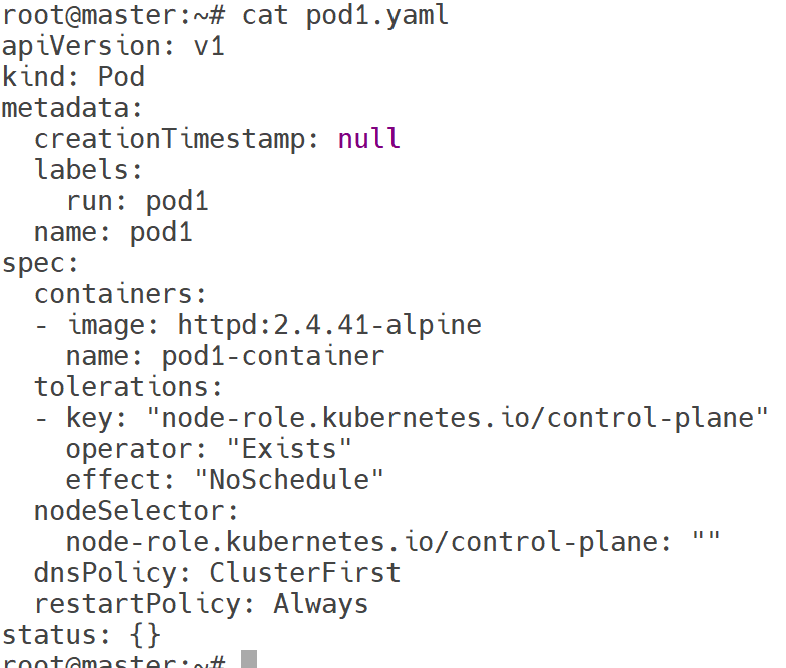

k run pod1 --image=httpd:2.4.41-alpine --dry-run=client -o yaml

k get node master --show-labels | grep control-plane
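The label shows which nodeSelector to use; the taints on the node decide which toleration is needed. A small sketch for listing them, assuming kubectl is configured for the cluster (the helper name `node_taints` is hypothetical):

```shell
# Hypothetical helper: list the taint keys and effects set on a node,
# one "key:effect" pair per line. Assumes kubectl can reach the cluster.
node_taints() {
  kubectl get node "$1" \
    -o jsonpath='{range .spec.taints[*]}{.key}{":"}{.effect}{"\n"}{end}'
}

# Example call (node name "master" as in this practice environment):
#   node_taints master
```

Each key and effect printed this way should be matched by an entry under tolerations in the Pod manifest.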

//vi pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  containers:
  - image: httpd:2.4.41-alpine
    name: pod1-container
  tolerations:
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Exists"
    effect: "NoSchedule"
  nodeSelector:
    node-role.kubernetes.io/control-plane: ""
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

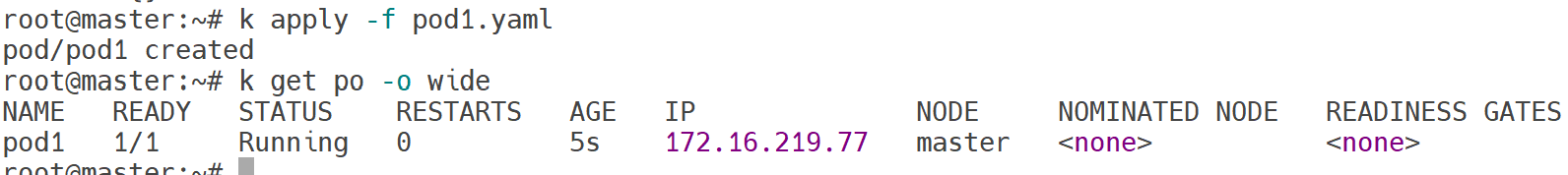

k apply -f pod1.yaml

k get po -o wide

https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/

Taints and Tolerations


https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/

Assigning Pods to Nodes


https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity

Assigning Pods to Nodes


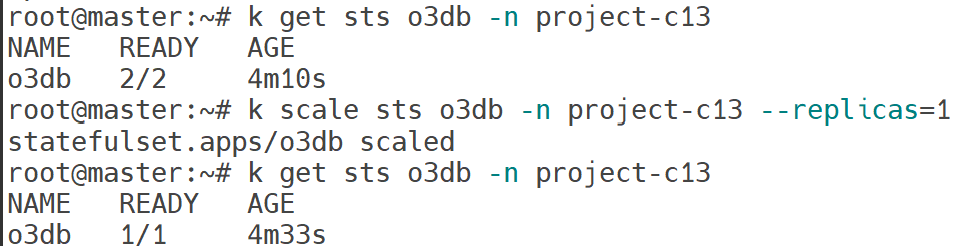

Question 3 | Scale down StatefulSet

Use context: kubectl config use-context k8s-c1-H

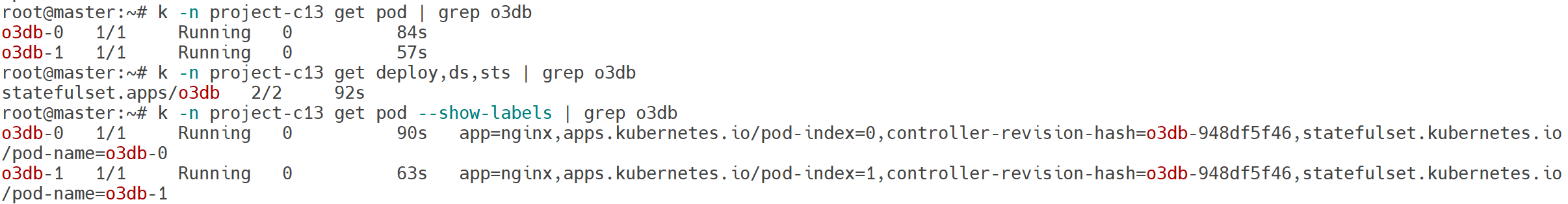

There are two Pods named o3db-* in Namespace project-c13. C13 management asked you to scale the Pods down to one replica to save resources.

Answer:

k scale sts o3db -n project-c13 --replicas=1

https://kubernetes.io/docs/tasks/run-application/scale-stateful-set/

Scale a StatefulSet


Question 4 | Pod Ready if Service is reachable

Use context: kubectl config use-context k8s-c1-H

Do the following in Namespace default.

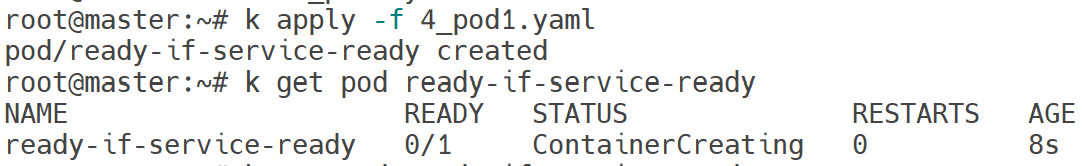

Create a single Pod named ready-if-service-ready of image nginx:1.16.1-alpine.

Configure a LivenessProbe which simply executes command true.

Also configure a ReadinessProbe which does check if the url http://service-am-i-ready:80 is reachable, you can use wget -T2 -O- http://service-am-i-ready:80 for this.

Start the Pod and confirm it isn't ready because of the ReadinessProbe.

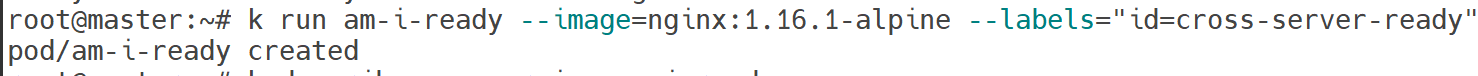

Create a second Pod named am-i-ready of image nginx:1.16.1-alpine with label id: cross-server-ready.

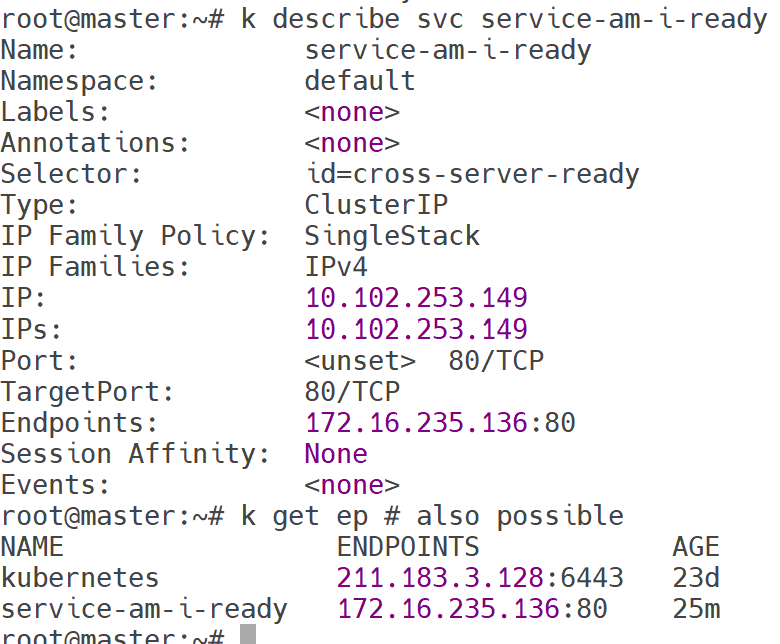

The already existing Service service-am-i-ready should now have that second Pod as endpoint.

Now the first Pod should be in ready state, confirm that.

Answer:

4_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: ready-if-service-ready
  name: ready-if-service-ready
spec:
  containers:
  - image: nginx:1.16.1-alpine
    name: ready-if-service-ready
    resources: {}
    livenessProbe: # add from here
      exec:
        command:
        - 'true'
    readinessProbe:
      exec:
        command:
        - sh
        - -c
        - 'wget -T2 -O- http://service-am-i-ready:80' # to here
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

k run am-i-ready --image=nginx:1.16.1-alpine --labels="id=cross-server-ready"

k get pod ready-if-service-ready
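To confirm readiness without reading the READY column by eye, the Ready condition can be queried directly. This is a sketch under the assumption that the manifest above has already been applied (for example with `k apply -f 4_pod.yaml`); the helper name `pod_ready` is hypothetical.

```shell
# Hypothetical helper: print the status of a Pod's Ready condition
# ("True" or "False"). Assumes kubectl can reach the cluster.
# Second argument is the namespace and defaults to "default".
pod_ready() {
  kubectl get pod "$1" -n "${2:-default}" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}'
}

# Example calls:
#   pod_ready ready-if-service-ready   # before am-i-ready exists
#   pod_ready ready-if-service-ready   # again once am-i-ready is running
```

Running it before and after creating am-i-ready covers both confirmation steps the question asks for.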

Configure Liveness, Readiness and Startup Probes


Question 5 | Kubectl sorting

Use context: kubectl config use-context k8s-c1-H

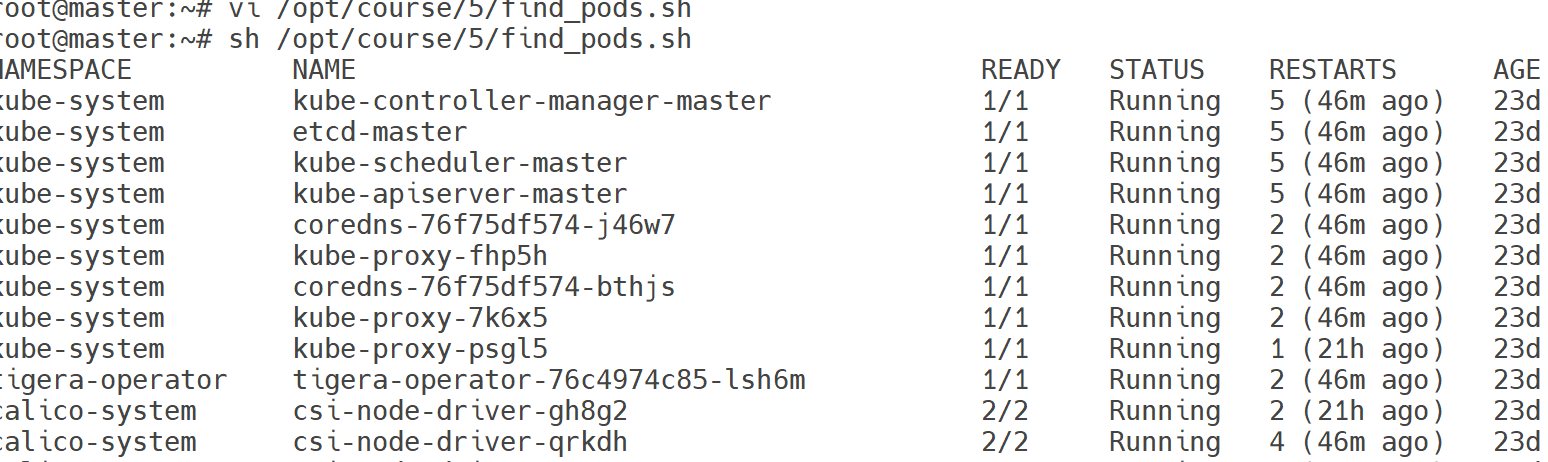

There are various Pods in all namespaces. Write a command into /opt/course/5/find_pods.sh which lists all Pods sorted by their AGE (metadata.creationTimestamp).

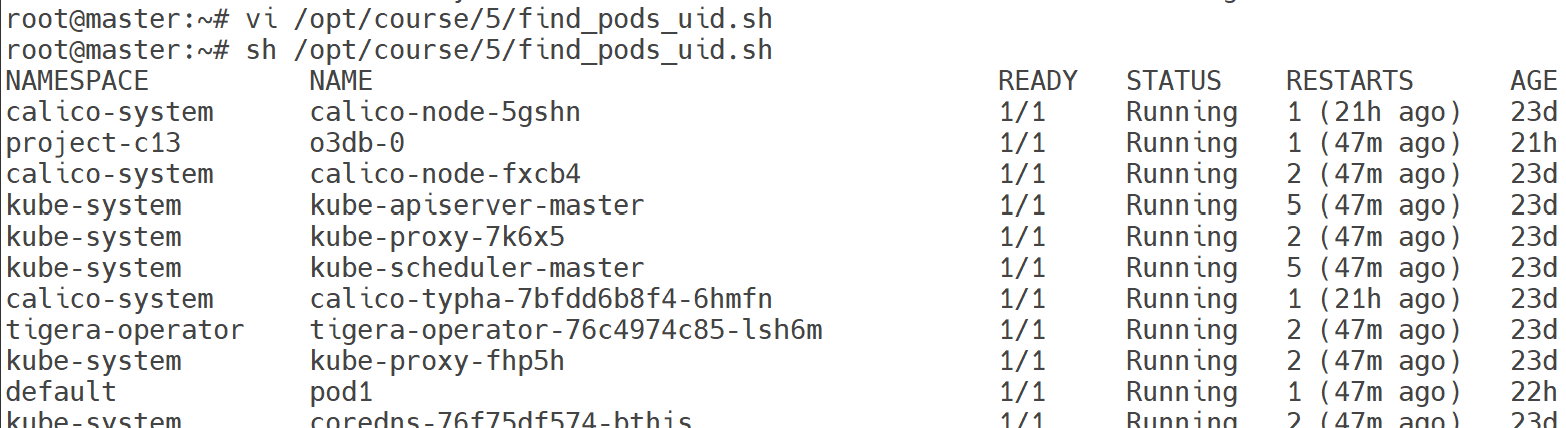

Write a second command into /opt/course/5/find_pods_uid.sh which lists all Pods sorted by field metadata.uid. Use kubectl sorting for both commands.

Answer:

# /opt/course/5/find_pods.sh

kubectl get pod -A --sort-by=.metadata.creationTimestamp

# /opt/course/5/find_pods_uid.sh

kubectl get pod -A --sort-by=.metadata.uid
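One way to get the two commands into their files is a quoted heredoc, which writes the text literally without the shell expanding anything. This is only a sketch: `COURSE_DIR` is an assumed variable, and it falls back to a temporary directory so the snippet can be tried outside the exam, where the target would be /opt/course/5.

```shell
# Target directory: /opt/course/5 in the exam; a temp dir by default here
# (assumption) so the sketch can be run anywhere.
course_dir="${COURSE_DIR:-$(mktemp -d)}"

# Quoted heredoc delimiter: content is written literally, nothing is expanded.
cat > "$course_dir/find_pods.sh" <<'EOF'
kubectl get pod -A --sort-by=.metadata.creationTimestamp
EOF

cat > "$course_dir/find_pods_uid.sh" <<'EOF'
kubectl get pod -A --sort-by=.metadata.uid
EOF

# Show what was written.
cat "$course_dir/find_pods.sh" "$course_dir/find_pods_uid.sh"
```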

https://kubernetes.io/pt-br/docs/reference/kubectl/cheatsheet/

kubectl Cheat Sheet


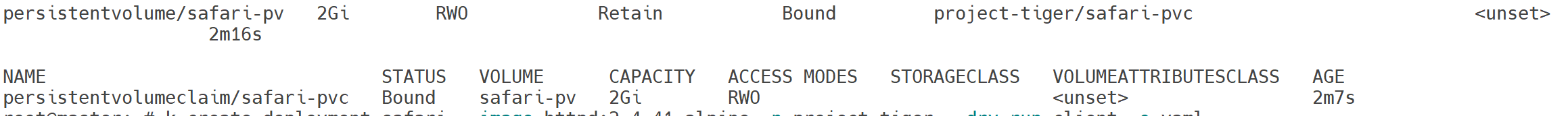

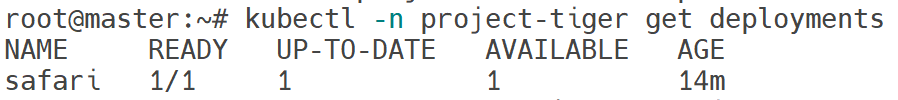

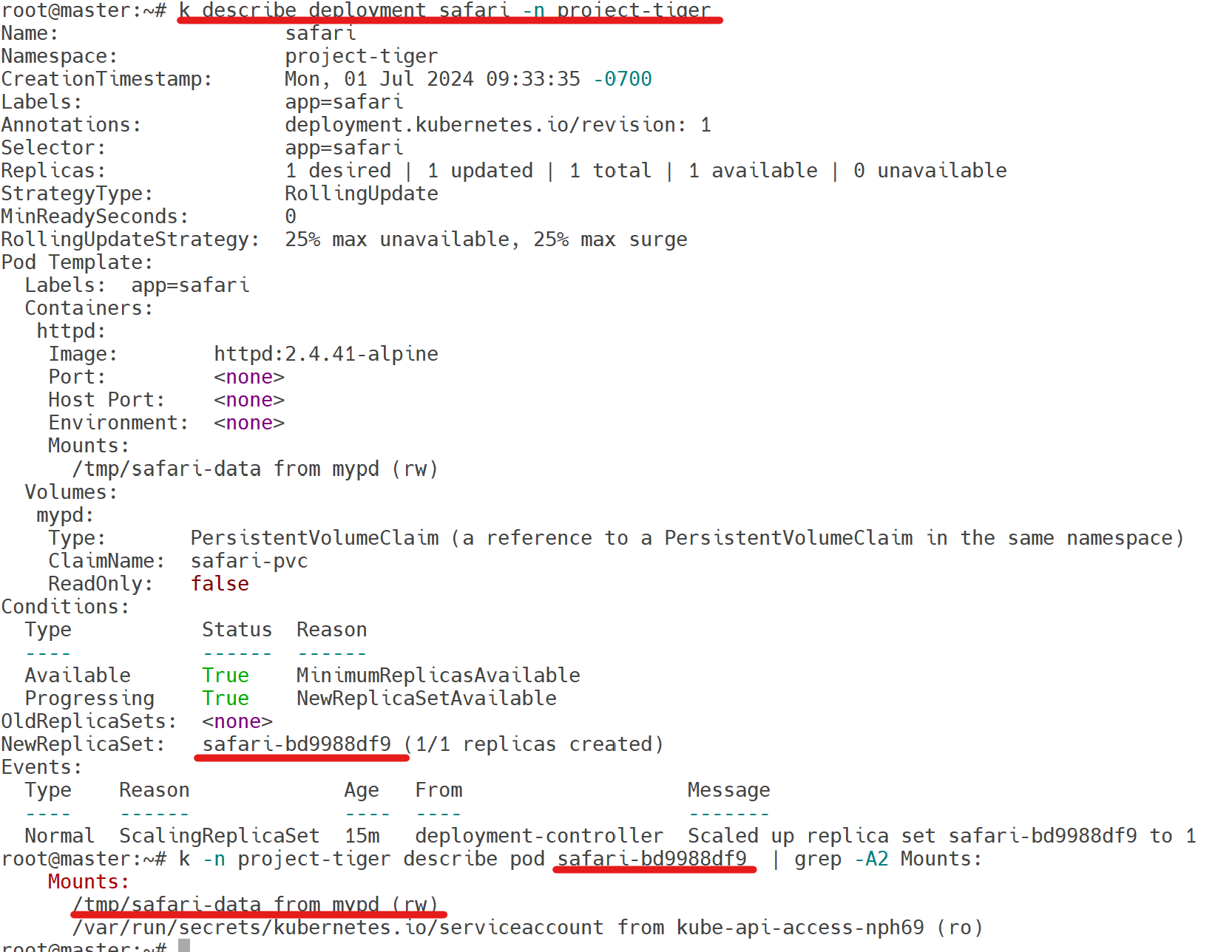

Question 6 | Storage, PV, PVC, Pod volume

Use context: kubectl config use-context k8s-c1-H

Create a new PersistentVolume named safari-pv. It should have a capacity of 2Gi, accessMode ReadWriteOnce, hostPath /Volumes/Data and no storageClassName defined.

Next create a new PersistentVolumeClaim in Namespace project-tiger named safari-pvc . It should request 2Gi storage, accessMode ReadWriteOnce and should not define a storageClassName. The PVC should bound to the PV correctly.

Finally create a new Deployment safari in Namespace project-tiger which mounts that volume at /tmp/safari-data. The Pods of that Deployment should be of image httpd:2.4.41-alpine.

Answer:

//cat 6_pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: safari-pv
spec:
  hostPath:
    path: /Volumes/Data
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteOnce

//cat 6_pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: safari-pvc
  namespace: project-tiger
spec:
  accessModes:
  - ReadWriteOnce
  volumeMode: Filesystem
  resources:
    requests:
      storage: 2Gi

//cat 6_deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: safari
  name: safari
  namespace: project-tiger
spec:
  replicas: 1
  selector:
    matchLabels:
      app: safari
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: safari
    spec:
      containers:
      - image: httpd:2.4.41-alpine
        name: httpd
        volumeMounts:
        - mountPath: "/tmp/safari-data"
          name: mypd
      volumes:
      - name: mypd
        persistentVolumeClaim:
          claimName: safari-pvc
status: {}
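The question requires the PVC to bind to the PV, so it is worth checking the claim's phase once the three manifests have been applied (for example with `k apply -f` on each file). A minimal sketch, assuming kubectl is configured for the cluster; the helper name `pvc_phase` is hypothetical.

```shell
# Hypothetical helper: print the phase of a PersistentVolumeClaim
# (for example Pending or Bound). Assumes kubectl can reach the cluster.
pvc_phase() {
  kubectl get pvc "$1" -n "$2" -o jsonpath='{.status.phase}'
}

# Example call:
#   pvc_phase safari-pvc project-tiger
```

A phase of Bound indicates the claim was matched to a volume; Pending usually means no PV satisfied the requested size, access mode, or storage class.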

https://kubernetes.io/docs/concepts/storage/persistent-volumes/#claims-as-volumes

Persistent Volumes


Question 7 | Node and Pod Resource Usage

Use context: kubectl config use-context k8s-c1-H

The metrics-server has been installed in the cluster. Your colleague would like to know the kubectl commands to:

1. show Nodes resource usage

2. show Pods and their containers resource usage

Please write the commands into /opt/course/7/node.sh and /opt/course/7/pod.sh.

Answer:

# /opt/course/7/node.sh

kubectl top node

# /opt/course/7/pod.sh

kubectl top pod --containers=true

Note: metrics-server is an official Kubernetes add-on. It is installed from the raw manifest URL fetched with curl.

https://kubernetes.io/docs/reference/kubectl/generated/kubectl_top/

kubectl top


- metrics-server

https://kubernetes.io/docs/tasks/debug/debug-cluster/resource-metrics-pipeline/#metrics-api

Resource metrics pipeline


Question 8 | Get Controlplane Information

Use context: kubectl config use-context k8s-c1-H

Ssh into the controlplane node with ssh cluster1-controlplane1. Check how the controlplane components kubelet, kube-apiserver, kube-scheduler, kube-controller-manager and etcd are started/installed on the controlplane node. Also find out the name of the DNS application and how it's started/installed on the controlplane node.

Write your findings into file /opt/course/8/controlplane-components.txt. The file should be structured like:

# /opt/course/8/controlplane-components.txt
kubelet: [TYPE]
kube-apiserver: [TYPE]
kube-scheduler: [TYPE]
kube-controller-manager: [TYPE]
etcd: [TYPE]
dns: [TYPE] [NAME]

Choices of [TYPE] are: not-installed, process, static-pod, pod

Answer:

ps aux | grep kubelet # shows kubelet process

find /usr/lib/systemd | grep kube

find /usr/lib/systemd | grep etcd

find /etc/kubernetes/manifests/

kubectl -n kube-system get pod -o wide | grep master

kubectl -n kube-system get ds

kubectl -n kube-system get deploy

# /opt/course/8/controlplane-components.txt

kubelet: process

kube-apiserver: static-pod

kube-scheduler: static-pod

kube-controller-manager: static-pod

etcd: static-pod

dns: pod coredns
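The static-pod entries above come from the files found under /etc/kubernetes/manifests/. That check can be sketched as a small function; `component_type` is a hypothetical helper that only tells static Pods apart from everything else, so processes and regular Pods still need the `ps`, `find` and `kubectl` checks shown earlier. The demo directory is a stand-in for the real manifests path.

```shell
# Hypothetical helper: report "static-pod" when a manifest for the component
# exists in the kubelet's static Pod directory, otherwise "unknown".
# It does not detect systemd services or regular Pods.
component_type() {
  manifests_dir="${2:-/etc/kubernetes/manifests}"
  if [ -f "$manifests_dir/$1.yaml" ]; then
    echo "static-pod"
  else
    echo "unknown"
  fi
}

# Demo against a throwaway directory that mimics a kubeadm layout (assumption).
demo_dir="$(mktemp -d)"
touch "$demo_dir/kube-apiserver.yaml" "$demo_dir/etcd.yaml"
for c in kube-apiserver etcd kubelet; do
  echo "$c: $(component_type "$c" "$demo_dir")"
done
```

On the control-plane node itself the second argument can be dropped, so the default manifests path is used.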

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/kubelet-integration/

Configuring each kubelet in your cluster using kubeadm


★

Question 9 | Kill Scheduler, Manual Scheduling

Use context: kubectl config use-context k8s-c2-AC

Ssh into the controlplane node with ssh cluster2-controlplane1. Temporarily stop the kube-scheduler, this means in a way that you can start it again afterwards.

Create a single Pod named manual-schedule of image httpd:2.4-alpine, confirm it's created but not scheduled on any node.

Now you're the scheduler and have all its power, manually schedule that Pod on node cluster2-controlplane1. Make sure it's running.

Start the kube-scheduler again and confirm it's running correctly by creating a second Pod named manual-schedule2 of image httpd:2.4-alpine and check if it's running on cluster2-node1.

Answer:

kubectl -n kube-system get pod | grep schedule

cd /etc/kubernetes/manifests/

mv kube-scheduler.yaml ..

//kube-scheduler is stopped, so a newly created Pod stays Pending

k run manual-schedule --image=httpd:2.4-alpine

//k get pod manual-schedule -o yaml > 9.yaml, then edit 9.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2020-09-04T15:51:02Z"
  labels:
    run: manual-schedule
  managedFields:
  ...
    manager: kubectl-run
    operation: Update
    time: "2020-09-04T15:51:02Z"
  name: manual-schedule
  namespace: default
  resourceVersion: "3515"
  selfLink: /api/v1/namespaces/default/pods/manual-schedule
  uid: 8e9d2532-4779-4e63-b5af-feb82c74a935
spec:
  nodeName: cluster2-controlplane1 # add the controlplane node name
  containers:
  - image: httpd:2.4-alpine
    imagePullPolicy: IfNotPresent
    name: manual-schedule
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-nxnc7
      readOnly: true
  dnsPolicy: ClusterFirst
...

k -f 9.yaml replace --force

//pod is now Running

cd /etc/kubernetes/manifests/

mv ../kube-scheduler.yaml .

kubectl -n kube-system get pod | grep schedule //running

k run manual-schedule2 --image=httpd:2.4-alpine

k get pod -o wide | grep schedule

//manual-schedule 1/1 Running ... cluster2-controlplane1

//manual-schedule2 1/1 Running ... cluster2-node1
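The stop and start steps above amount to moving the scheduler's manifest out of, and back into, the directory the kubelet watches for static Pods. A minimal sketch of that pattern follows; the helper names `stop_static_pod` and `start_static_pod` are hypothetical, and the demo uses throwaway directories instead of /etc/kubernetes so it can be run safely anywhere.

```shell
# Hypothetical helpers: park a static Pod manifest outside the directory the
# kubelet watches, and put it back later.
stop_static_pod() {   # args: name manifests_dir parking_dir
  mv "$2/$1.yaml" "$3/$1.yaml"
}
start_static_pod() {  # args: name manifests_dir parking_dir
  mv "$3/$1.yaml" "$2/$1.yaml"
}

# Demo with throwaway directories instead of /etc/kubernetes (assumption).
manifests="$(mktemp -d)"
parking="$(mktemp -d)"
touch "$manifests/kube-scheduler.yaml"

stop_static_pod kube-scheduler "$manifests" "$parking"
ls "$manifests"    # directory is empty while the manifest is parked
start_static_pod kube-scheduler "$manifests" "$parking"
ls "$manifests"    # kube-scheduler.yaml is back
```

Moving the file rather than deleting it is what makes the stop temporary, which is the condition the question sets.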

https://kubernetes.io/docs/concepts/scheduling-eviction/kube-scheduler/

Kubernetes Scheduler


Question 10 | RBAC ServiceAccount Role RoleBinding

Use context: kubectl config use-context k8s-c1-H

Create a new ServiceAccount processor in Namespace project-hamster. Create a Role and RoleBinding, both named processor as well. These should allow the new SA to only create Secrets and ConfigMaps in that Namespace.

Answer:

k -n project-hamster create sa processor

k -n project-hamster create role processor \

--verb=create \

--resource=secret \

--resource=configmap

k -n project-hamster create rolebinding processor \

--role processor \

--serviceaccount project-hamster:processor

result

➜ k -n project-hamster auth can-i create secret \

--as system:serviceaccount:project-hamster:processor

yes

➜ k -n project-hamster auth can-i create configmap \

--as system:serviceaccount:project-hamster:processor

yes

➜ k -n project-hamster auth can-i create pod \

--as system:serviceaccount:project-hamster:processor

no

➜ k -n project-hamster auth can-i delete secret \

--as system:serviceaccount:project-hamster:processor

no

➜ k -n project-hamster auth can-i get configmap \

--as system:serviceaccount:project-hamster:processor

no

https://kubernetes.io/docs/reference/access-authn-authz/rbac/

Using RBAC Authorization
